Blog

Updates and insights on data ownership in the AI era — exploring how permission builds a safer, more transparent, and rewarding digital future.

Recent Articles

Parenting In the Age of AI: Why Tech Is Making Parenting Harder – and What Parents Can Do

Many parents sense a shift in their children’s environment but can’t quite put their finger on it.

Children aren't just using technology. Conversations, friendships, and identity formation are increasingly taking place online - across platforms that most parents neither grew up with nor fully understand.

Many parents feel one step behind and ask: How do I raise my child in a tech world that evolves faster than I can keep up with?

Why Parenting Feels Harder in the Digital Age

Technology today is not static. AI-driven and personalized platforms adapt faster than families can.

Parents want to raise their children to live healthy, grounded lives without becoming controlling or disconnected. Yet, many parents describe feeling:

- Outpaced by the evolution of AI and algorithms

- Disconnected from their children's digital lives

- Concerned about safety when AI becomes a companion

- Frustrated with insufficient traditional parental controls

Research shows this shift clearly:

- 66% of parents say parenting is harder today than 20 years ago, citing technology as a key factor.

- Reddit discussions reveal how parents experience a “nostalgia gap,” in which their own childhoods do not resemble the digital worlds their children inhabit.

- 86% of parents set rules around screen use, yet only about 20% follow these rules consistently, highlighting ongoing tension in managing children’s device use.

Together, these findings suggest that while parents are trying to manage technology, the tools and strategies available to them haven’t kept pace with how fast digital environments evolve.

Technology has made parenting harder.

The Pressure Parents Face Managing Technology

Parents are repeatedly being told that managing their children's digital exposure is their responsibility.

The message is subtle but persistent: if something goes wrong, it’s because “you didn’t do enough.”

This gatekeeper role is an unreasonable expectation. Children’s online lives are always within reach, embedded in education, friendships, entertainment, and creativity. Expecting parents to take full control overlooks the reality of modern childhood, where digital life is constant and unavoidable.

This expectation often creates chronic emotional and somatic guilt for parents. At the same time, AI-driven platforms are continuously optimized to increase engagement in ways parents cannot realistically counter.

As licensed clinical social worker Stephen Hanmer D'Elía explains in The Attention Wound: What the attention economy extracts and what the body cannot surrender, "the guilt is by design." Attention-driven systems are engineered to overstimulate users and erode self-regulation (for children and adults alike). Parents experience the same nervous-system overload as their kids, while lacking the benefit of growing up with these systems. These outcomes reflect system design, not parental neglect.

Ongoing Reddit threads reflect this reality. Parents describe feeling behind and uncertain about how to guide their children through digital environments they are still learning to understand themselves. These discussions highlight the emotional and cognitive toll that rapidly evolving technology places on families.

Parenting In A Digital World That Looks Nothing Like The One We Grew Up In

Many parents instinctively reach for their own childhoods as a reference point but quickly realize that comparison no longer works in today’s world. Adults remember life before smartphones; children born into constant digital stimulation have no such baseline.

Indeed, “we played outside all day” no longer reflects the reality of the world children are growing up in today. Playgrounds are now digital. Friendships, humor, and creativity increasingly unfold online.

This gap leaves parents feeling unqualified. Guidance feels harder when the environment is foreign, especially when society insists that parents should already know how to provide it.

Children Are Relying on Chatbots for Emotional Support Over Parents

AI has crossed a threshold: from tool to companion.

Children are increasingly turning to chatbots for conversation and emotional support, often in private.

About one in ten parents with children ages 5-12 report that their children use AI chatbots like ChatGPT or Gemini. These children ask personal questions, share worries, and seek guidance on topics they feel hesitant to discuss with adults.

Many parents fear that their child may rely on AI first instead of coming to them. Psychologists warn that this shift is significant because AI is designed to be endlessly available and instantly responsive (ParentMap, 2025).

Risks include:

- Exposure to misinformation.

- Emotional dependency on systems that can simulate care but cannot truly understand or respond responsibly.

- Blurred boundaries between human relationships and machine interaction.

Reporting suggests children are forming emotionally meaningful relationships with AI systems faster than families, schools, and safeguards can adapt (Guardian, 2025; After Babel, 2025b).

Unlike traditional tools, AI chatbots are built for constant availability and emotional responsiveness, which can blur boundaries for children still developing judgment and self-regulation — and may unintentionally mirror, amplify, or reinforce negative emotions instead of providing the perspective and limits that human relationships offer.

Why Traditional Parental Controls are Failing

Traditional parental controls were built for an “earlier internet,” one where parents could see and manage their children online. Today’s internet is algorithmic.

Algorithmic platforms bypass parental oversight by design. Interventions like removing screens or setting limits often increase conflict, secrecy, and addictive behaviors rather than teaching self-regulation or guiding children on how to navigate digital spaces safely (Pew Research, 2025; r/Parenting, 2025).

A 2021 JAMA Network study found video platforms popular with kids use algorithms to recommend content based on what keeps children engaged, rather than parental approval. Even when children start with neutral searches, the system can quickly surface videos or posts that are more exciting. These algorithms continuously adapt to a child’s behavior, creating personalized “rabbit holes” of content that change faster than any screen-time limit or parental control can manage.

Even the most widely used parental control tools illustrate this limitation in practice, focusing on:

- reacting after exposure (Bark)

- protecting against external risks (Aura)

- limiting access (Qustodio)

- tracking physical location (Life360)

What they largely miss is visibility into the algorithmic systems and personalized feeds that actively shape children’s digital experiences in real time.

A Better Approach to Parenting in the Digital Age

In a world where AI evolves faster than families can keep up, more restrictions won’t solve the disconnection between parents and children. Parents need tools and strategies that help them stay informed and engaged in environments they cannot fully see or control.

Some companies, like Permission, focus on translating digital activity into clear insights, helping parents notice patterns, understand context, and respond thoughtfully without prying.

Raising children in a world where AI moves faster than we can keep up is about staying present, understanding the systems shaping children’s digital lives, and strengthening the human connection that no algorithm can replicate.

What Parents Can Do in a Rapidly Changing Digital World

While no single tool or rule can solve these challenges, many parents ask what actually helps in practice.

Below are some of the most common questions parents raise — and approaches that research and lived experience suggest can make a difference.

Do parents need to fully understand every app, platform, or AI tool their child uses?

No. Trying to keep up with every platform or feature often increases stress without improving outcomes.

What matters more is understanding patterns: how digital use fits into a child’s routines, moods, sleep, and social life over time. Parents don’t need perfect visibility into everything their child does online; they need enough context to notice meaningful changes and respond thoughtfully.

What should parents think about AI tools and chatbots used by kids?

AI tools introduce a new dynamic because they are:

- always available

- highly responsive

- designed to simulate conversation and support

This matters because children may turn to these tools privately, for curiosity, comfort, or companionship. Rather than reacting only to the technology itself, parents benefit from understanding how and why their child is using AI, and having age-appropriate conversations about boundaries, trust, and reliance.

How can parents stay involved without constant monitoring or conflict?

Parents are most effective when they can:

- notice meaningful shifts early

- understand context before reacting

- talk through digital choices rather than enforce rules after the fact

This shifts digital parenting from surveillance to guidance. When children feel supported rather than watched, conversations tend to be more open, and conflict is reduced.

What kinds of tools actually support parents in this environment?

Tools that focus on insight rather than alerts, and patterns rather than isolated moments, are often more helpful than tools that simply report activity after something goes wrong.

Some approaches — including platforms like Permission — are designed to translate digital activity into understandable context, helping parents notice trends, ask better questions, and stay connected without hovering. The goal is to support parenting decisions, not replace them.

The Bigger Picture

Parenting in the age of AI isn’t about total control, and it isn’t about stepping back entirely.

It’s about helping kids:

- develop judgment

- understand digital influence

- build healthy habits

- stay grounded in human relationships

As technology continues to evolve, the most durable form of online safety comes from understanding, trust, and connection — not from trying to surveil or outpace every new system.

How You Earn with the Permission Agent

The Permission Agent was built to do more than sit in your browser.

It was designed to work for you: spotting opportunities, handling actions on your behalf, and making it super easy to earn rewards as part of your everyday internet use.

Here’s how earning works with the Permission Agent.

Earning Happens Through the Agent

Earning with Permission is powered by Agent-delivered actions designed to support the growth of the Permission ecosystem.

Rewards come through Rewarded Actions and Quick Earns, surfaced directly inside the Agent. When you use the Agent regularly, you’ll see clear, opt-in earning opportunities presented to you.

Importantly, earning is no longer based on passive browsing. Instead, opportunities are delivered intentionally through actions you choose to participate in, with rewards disclosed upfront.

You don’t need to search for offers or manage complex workflows. The Agent organizes opportunities and helps carry out the work for you.

Daily use is how you discover what’s available.

Rewarded Actions and Quick Earns

Rewarded Actions and Quick Earns are the primary ways users earn ASK through the Agent.

These opportunities may include:

- Supporting Permission launches and initiatives

- Participating in community programs or campaigns

- Sharing Permission through guided promotional actions

- Taking part in contests or time-bound promotions

All opportunities are presented clearly through the Agent, participation is always optional, and rewards are transparent.

The Agent Does the Work

What makes earning different with Permission is the Agent itself.

You choose which actions to participate in, and the Agent handles execution - reducing friction while keeping you in control. Instead of completing repetitive steps manually, the Agent performs guided tasks on your behalf, including mechanics behind promotions and referrals.

The result: earning ASK feels lightweight and natural because the Agent handles the busywork.

The more consistently you use the Agent, the more opportunities you’ll see.

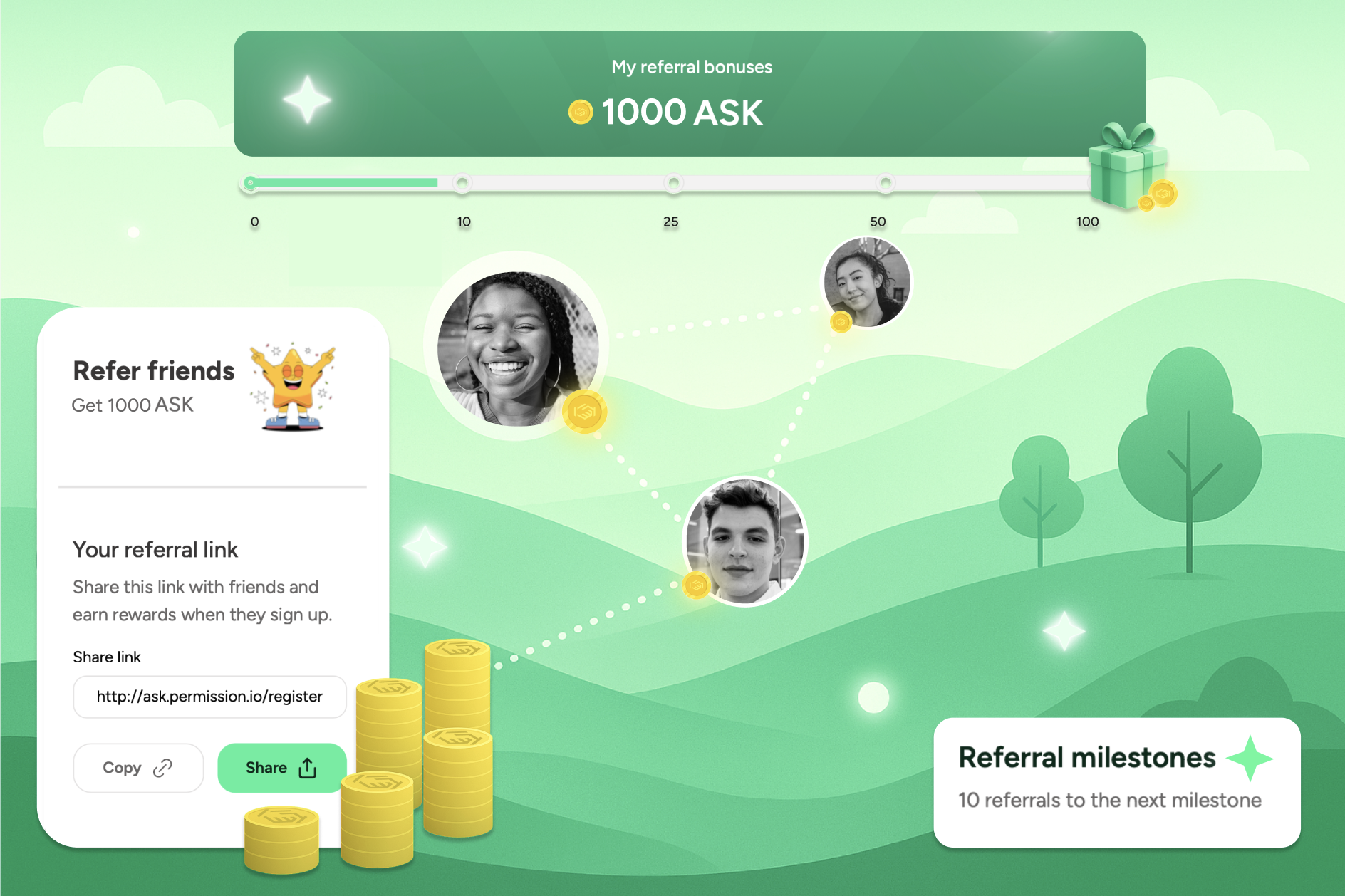

Referrals and Lifetime Rewards

Referrals remain one of the most powerful ways to earn with Permission.

When you refer someone to Permission:

- You earn when they become active

- You continue earning as their activity grows

- You receive ongoing rewards tied to the value created by your referral network

As your referrals use the Permission Agent, it becomes easier for them to discover earning opportunities - and as they earn more, so do you.

Referral rewards operate independently of daily Agent actions, allowing you to build long-term, compounding value.

Learn more here:

👉 Unlock Rewards with the Permission Referral Program

What to Expect Over Time

As the Permission ecosystem grows, earning opportunities will expand.

You can expect:

- New Rewarded Actions and Quick Earns delivered through the Agent

- Campaigns tied to community growth and product launches

- Opportunities ranging from quick wins to more meaningful rewards

Checking in with your Agent regularly is the best way to stay up to date.

Getting Started

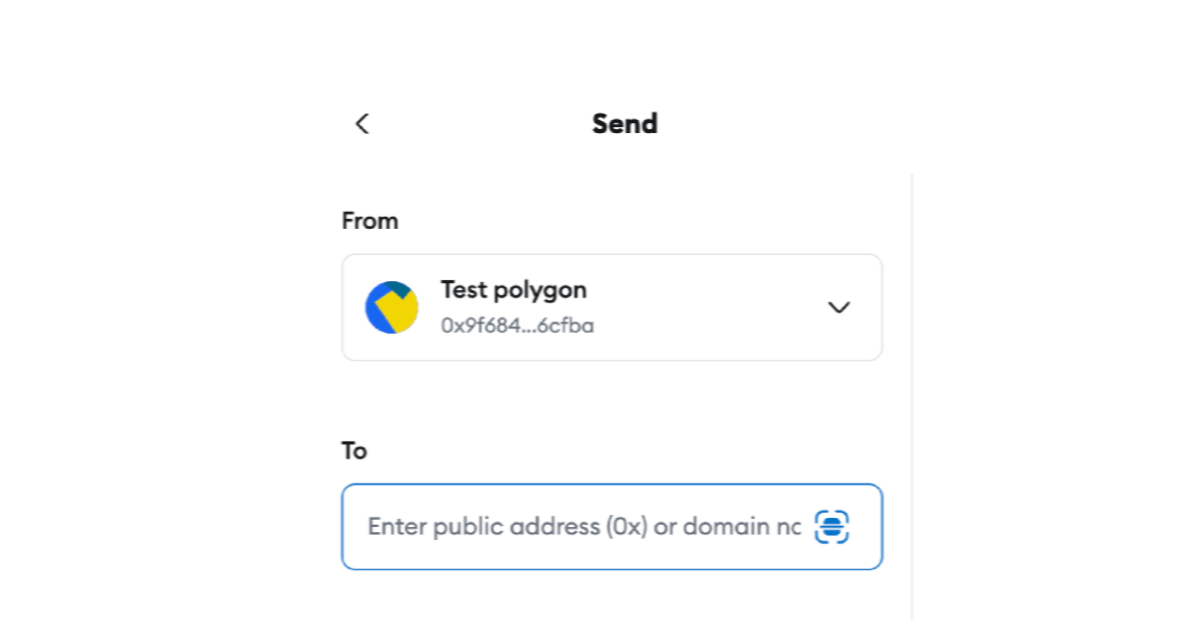

Getting started takes just a few minutes:

- Install the Permission Agent

- Sign in and activate it

- Use the Agent daily to see available Rewarded Actions and Quick Earns

From there, the Agent takes care of the rest - helping you participate, complete actions, and earn ASK over time.

Built for Intentional Participation

Earning with the Permission Agent is designed to be clear, intentional, and sustainable.

Rewards come from choosing to participate, using the Agent regularly, and contributing to the growth of the Permission ecosystem. The Agent makes that participation easy by handling the work - so value flows back to you without unnecessary effort.

2026: The Year of Disruption – Trust Becomes the Most Valuable Commodity

Moore’s Law is still at work, and in many ways it is accelerating.

AI capabilities, autonomous systems, and financial infrastructure are advancing faster than our institutions, norms, and governance frameworks can absorb. For that acceleration to benefit society at a corresponding rate, one thing must develop just as quickly: trust.

2026 will be the year of disruption across markets, government, higher education, and digital life itself. In every one of those domains, trust becomes the premium asset. Not brand trust. Not reputation alone. But verifiable, enforceable, system-level trust.

Here’s what that means in practice.

1. Trust Becomes Transactional, not Symbolic

Trust between agents won’t rely on branding or reputation alone. It will be built on verifiable exchange: who benefits, how value is measured, and whether compensation is enforceable. Trust becomes transparent, auditable, and machine-readable.

2. AI Agents Move from Novelty to Infrastructure

Autonomous, goal-driven AI agents will quietly become foundational internet infrastructure. They won’t look like apps or assistants. They will operate continuously, negotiating, executing, and learning across systems on behalf of humans and institutions.

The central challenge will be trust: whether these agents are acting in the interests of the humans, organizations, and societies they represent, and whether that behavior can be verified.

3. Agent-to-Agent Interactions Overtake Human-Initiated Ones

Most digital interactions in 2026 won’t start with a human click. They will start with one agent negotiating with another. Humans move upstream, setting intent and constraints, while agents handle execution. The internet becomes less conversational and more transactional by design.

4. Agent Economies Force Value Exchange to Build Trust

An economy of autonomous agents cannot run on extraction if trust is to exist.

In 2026, value exchange becomes mandatory, not as a monetization tactic, but as a trust-building mechanism. Agents that cannot compensate with money, tokens, or provable reciprocity will be rate-limited, distrusted, or blocked entirely.

“Free” access doesn’t scale in a defended, agent-native internet where trust must be earned, not assumed.

5. AI and Crypto Converge, with Ethereum as the Coordination Layer

AI needs identity, ownership, auditability, and value rails. Crypto provides all four. In 2026, the Ethereum ecosystem emerges as the coordination layer for intelligent systems exchanging value, not because of speculation, but because it solves real structural problems AI cannot solve alone.

6. Smart Contracts Evolve into Living Agreements

Static smart contracts won’t survive an agent-driven economy. In 2026, contracts become adaptive systems, renegotiated in real time as agents perform work, exchange data, and adjust outcomes. Law doesn’t disappear. It becomes dynamic, executable, and continuously enforced.

7. Wall Street Embraces Tokenization

By 2026, Wall Street fully embraces tokenization. Stocks, bonds, options, real estate interests, and other financial instruments move onto programmable rails.

This shift isn’t about ideology. It’s about efficiency, liquidity, and trust through transparency. Tokenization allows ownership, settlement, and compliance to be enforced at the system level rather than through layers of intermediaries.

8. AI-Driven Creative Destruction Accelerates

AI-driven disruption accelerates faster than institutions can adapt. Entire job categories vanish while new ones appear just as quickly.

The defining risk isn’t displacement. It’s erosion of trust in companies, labor markets, and social contracts that fail to keep pace with technological reality. Organizations that acknowledge disruption early retain trust. Those that deny it lose legitimacy.

9. Higher Education Restructures

Higher education undergoes structural change. A $250,000 investment in a four-year degree increasingly looks misaligned with economic reality. Companies begin to abandon degrees as a default requirement.

In their place, trust shifts toward social intelligence, ethics, adaptability, and demonstrated achievement. Proof of capability matters more than pedigree. Continuous learning matters more than static credentials.

Institutions that understand this transition retain relevance. Those that don’t lose trust, and students.

10. Governments Face Disruption From Systems They Don’t Control

AI doesn’t just disrupt industries. It disrupts governance itself. Agent networks ignore borders. AI evolves faster than regulation. Value flows escape traditional jurisdictional controls.

Governments face a fundamental choice: attempt to reassert control, or redesign systems around participation, verification, and trust. In 2026, adaptability becomes a governing advantage.

Conclusion

Moore’s Law hasn’t slowed. It has intensified. But technological acceleration without trust leads to instability, not progress.

2026 will be remembered as the year trust became the scarce asset across markets, government, education, and digital life.

The future isn’t human versus AI.

It’s trust-based systems versus everything else.

Raise Kids Who Understand Data Ownership, Digital Assets, and Online Safety

Online safety for kids has become more complex as AI systems, data tracking, and digital platforms increasingly shape what children see, learn, and engage with.

Parents today are navigating a digital world that looks very different from the one they grew up in.

Families Are Parenting in a World That Has Changed

Kids today don’t just grow up with technology. They grow up inside it.

They learn, socialize, explore identity, and build lifelong habits across apps, games, platforms, and AI-driven systems that operate continuously in the background. At the same time, parents face less visibility, more complexity, and fewer tools that genuinely support understanding without damaging trust.

For many families, this creates ongoing tension:

- conflict around screens

- uncertainty about what actually matters

- fear of missing something important

- a sense that digital life is moving faster than parenting tools have evolved

Research reflects this shift clearly:

- 81% of parents worry their children are being tracked online.

- 72% say AI has made parenting more stressful.

- 60% of teens report using AI tools their parents don’t fully understand.

The digital world has changed parenting. Families need support that reflects this new reality.

The Reality Families Are Facing Online

Online safety today involves far more than blocking content or limiting screen time.

Parents are navigating:

- Constant, multi-platform engagement, where behavior forms across apps, games, and feeds rather than in one place

- Early exposure to adult content, scams, manipulation, and persuasive design, often before kids understand intent or risk

- Mental health and sleep impacts, driven by routines and repetition rather than single moments

- AI-driven systems shaping what kids see, learn, buy, and interact with, often invisibly

- Social media dynamics, where likes, streaks, algorithms, and peer validation shape identity, self-esteem, mood, and behavior in ways that are hard for parents to see or contextualize

For many parents, online safety now includes understanding how algorithms, AI recommendations, and data collection influence children’s behavior over time.

These challenges don’t call for fear or more surveillance. They call for context, guidance, and teaching.

Kids’ First Digital Asset Isn’t Money - It’s Their Data

Every search.

Every click.

Every message.

Every interaction.

Kids begin creating value online long before they understand what value is - or who benefits from it.

Yet research shows:

- Only 18% of teens understand that companies profit from their data.

- 57% of parents say they don’t fully understand how their children’s data is used.

- 52% of parents do not feel equipped to help children navigate AI technology, with only 5% confident in guiding kids on responsible and safe AI use.

Financial literacy still matters. But in today’s digital world, digital literacy is foundational.

Children’s data is often their first digital asset. Their online identity becomes a long-lasting footprint. Learning when and how to share information - and when not to - is now a core life skill.

Why Traditional Online Safety Tools Don’t Go Far Enough

Most parental tools were built for an earlier version of the internet.

They focus on blocking, limiting, and monitoring - approaches that can be useful in specific situations, but often create new problems:

- increased secrecy

- power struggles

- reactive parenting without context

- children feeling managed rather than supported

Control alone doesn’t teach judgment. Monitoring alone doesn’t build trust.

Many parents want tools that help them understand what’s actually happening, so they can respond thoughtfully rather than react emotionally.

A Different Approach to Online Safety

Technology should support parenting, not replace it.

Tools like Permission.ai can help parents see patterns, routines, and meaningful shifts in digital behavior that are difficult to spot otherwise. When digital activity is translated into clear insight instead of raw data, parents are better equipped to guide their kids calmly and confidently.

This approach helps parents:

- notice meaningful changes early

- understand why something may matter

- respond without hovering or prying

Online safety becomes proactive and supportive - not fear-driven or punitive.

Teaching Responsibility as Part of Online Safety

Digital behavior rarely exists in isolation. It develops over time, across routines, interests, moods, and platforms.

Modern online safety works best when parents can:

- explain expectations clearly

- talk through digital choices with confidence

- guide kids toward healthier habits without guessing

Teaching responsibility helps kids build judgment - not just compliance.

Teach. Reward. Connect.

The most effective digital safety tools help families handle online life together.

That means:

- Teaching with insight, not guesswork

- Rewarding positive digital behavior in ways kids understand

- Reducing conflict by strengthening trust and communication

Kids already understand digital rewards through games, points, and credits. When used thoughtfully, reward systems can reinforce responsibility, connect actions to outcomes, and introduce age-appropriate understanding of digital value.

Parents remain in control, while kids gain early literacy in the digital systems shaping their world.

What Peace of Mind Really Means for Parents

Peace of mind doesn’t come from watching everything.

It comes from knowing you’ll notice what matters.

Parents want to feel:

- informed, not overwhelmed

- present, not intrusive

- prepared, not reactive

When tools surface meaningful changes early and reduce unnecessary noise, families can stay steady - even as digital life evolves.

This is peace of mind built on understanding, not fear.

Built for Families - Not Platforms

Online safety should respect families, children, and the role parents play in shaping healthy digital lives.

Parents want to protect without hovering.

They want awareness without prying.

They want help without losing authority.

As the digital world continues to evolve, families deserve tools that grow with them - supporting connection, responsibility, and trust.

The future of online safety isn’t control.

It’s understanding.

California’s SB 243 and the Future of AI Chatbot Safety for Kids

As a mom in San Diego, and someone who works at the intersection of technology, safety, and ethics, I was encouraged to see Governor Gavin Newsom sign Senate Bill 243, California’s first-in-the-nation law regulating companion chatbots. Authored by San Diego’s own Senator Steve Padilla, SB 243 is a landmark step toward ensuring that AI systems interacting with our children are held to basic standards of transparency, responsibility, and care.

This law matters deeply for families like mine. AI is no longer an abstract technological concept; it’s becoming woven into daily life, shaping how young people learn, socialize, ask questions, and seek comfort. And while many AI tools can provide meaningful support, recent tragedies - including the heartbreaking case of a 14-year-old boy whose AI “companion” failed to recognize or respond to signs of suicidal distress - make clear that these systems are not yet equipped to handle emotional vulnerability.

SB 243 sets the first layer of guardrails for a rapidly evolving landscape. But it is only the beginning of a broader shift, one that every parent, policymaker, and technology developer needs to understand.

Why Chatbots Captured Lawmakers’ Attention

AI “companions” are not simple customer-service bots. They simulate empathy, develop personalities, and sustain ongoing conversations that can resemble friendships or even relationships. And they are widely used: 72% of teens have engaged with an AI companion. Early research, including a Stanford study finding that 3% of young adults credited chatbot interactions with interrupting suicidal thoughts, shows how complex their effects can be.

But the darker side has generated national attention. Multiple high-profile cases - including lawsuits involving minors who died by suicide after chatbot interactions - prompted congressional hearings, FTC investigations, and testimony from parents who had lost their children. Many of these parents later appeared before state legislatures, including California’s, urging lawmakers to put protections in place.

This context shaped 2025 as the first year in which multiple states introduced or enacted laws specifically targeting companion chatbots, including Utah, Maine, New York, and California. The Future of Privacy Forum’s analysis of these trends can be found in their State AI Report (2025).

SB 243 stands out among these efforts because it explicitly focuses on youth safety, reflecting growing recognition that minors engage with conversational AI in ways that can blur boundaries and amplify emotional risks.

SB 243 Explained: What California Now Requires

SB 243 introduces a framework of disclosures, safety protocols, and youth-focused safeguards. It also grants individuals a private right of action, which has drawn significant attention from technologists and legal experts.

1. What Counts as a “Companion Chatbot”

SB 243 defines a companion chatbot as an AI system designed to:

- provide adaptive, human-like responses

- meet social or emotional needs

- exhibit anthropomorphic features

- sustain a relationship across multiple interactions

Excluded from the definition are bots used solely for:

- customer service

- internal operations

- research

- video games that do not discuss mental health, self-harm, or explicit content

- standalone consumer devices like voice-activated assistants

But even with exclusions, interpretation will be tricky. Does a bot that repeatedly interacts with a customer constitute a “relationship”? What about general-purpose AI systems used for entertainment? SB 243 will require careful legal interpretation as it rolls out.

2. Key Requirements Under SB 243

A. Disclosure Requirements

Operators must provide:

- Clear and conspicuous notice that the user is interacting with AI

- Notice that companion chatbots may not be suitable for minors

Disclosure is required when a reasonable person might think they’re talking to a human.

B. Crisis-Response Safety Protocols

Operators must:

- Prevent generation of content related to suicidal ideation or self-harm

- Redirect users to crisis helplines

- Publicly publish their safety protocols

- Submit annual, non-identifiable reports on crisis referrals to the California Office of Suicide Prevention

C. Minor-Specific Safeguards

When an operator knows a user is a minor, SB 243 requires:

- AI disclosure at the start of the interaction

- A reminder every 3 hours for the minor to take a break

- “Reasonable steps” to prevent sexual or sexually suggestive content
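For developers wondering what the first two safeguards could look like in practice, here is a minimal sketch. It is an illustration only, not compliance guidance and not taken from any real product: the `ChatSession` record, the `notices_due` function, and the notice names are all hypothetical, and the sketch assumes the operator already knows whether the user is a minor. Content filtering (the third safeguard) is not modeled.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional

# The 3-hour interval comes from the reminder requirement listed above.
BREAK_REMINDER_INTERVAL = timedelta(hours=3)


@dataclass
class ChatSession:
    """Hypothetical session record used only for this illustration."""
    started_at: datetime
    user_is_known_minor: bool
    disclosure_shown: bool = False
    last_reminder_at: Optional[datetime] = None


def notices_due(session: ChatSession, now: datetime) -> List[str]:
    """Return the minor-specific notices that should be shown at time `now`."""
    if not session.user_is_known_minor:
        # This sketch covers only the minor-specific safeguards.
        return []

    notices = []

    # AI disclosure at the start of the interaction.
    if not session.disclosure_shown:
        notices.append("ai_disclosure")
        session.disclosure_shown = True

    # Break reminder once 3 hours have passed since the last one
    # (or since the session started, if none has been shown yet).
    last = session.last_reminder_at or session.started_at
    if now - last >= BREAK_REMINDER_INTERVAL:
        notices.append("break_reminder")
        session.last_reminder_at = now

    return notices
```

Calling `notices_due` on each incoming message is one simple way to use it; a production system would also need to decide how sessions are defined and how reminders behave across devices, which the summary above does not specify.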

This intersects with California’s new age assurance bill, AB 1043, and creates questions about how operators will determine who is a minor without violating privacy or collecting unnecessary personal information.

D. Private Right of Action

Individuals may sue for:

- At least $1,000 in damages

- Injunctive relief

- Attorney’s fees

This provision gives SB 243 real teeth, and real risks for companies that fail to comply.

How SB 243 Fits Into the Broader U.S. Landscape

While California is the first state to enact youth-focused chatbot protections, it is part of a larger legislative wave.

1. Disclosure Requirements Across States

In 2025, six of seven major chatbot bills across the U.S. required disclosure. But states differ in timing and frequency:

- New York (Artificial Intelligence Companion Models law): disclosure at the start of every session and every 3 hours

- California (SB 243): 3-hour reminders only when the operator knows the user is a minor

- Maine (LD 1727): disclosure required but not time-specified

- Utah (H.B. 452): disclosure before chatbot features are accessed or upon user request
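To make the differences in frequency concrete, the list above can be written as a small lookup table. This is a simplified sketch based only on the summary in this article, not on the statutory text: the `ReminderRule` class, the `REMINDER_RULES` table, and the `repeat_notice_due` function are hypothetical names, and the sketch models only the repeat interval, not what each notice must say or when the first one appears.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ReminderRule:
    """Hypothetical, simplified view of one state's repeat-notice rule."""
    # None means the summary above states no repeat interval.
    repeat_every_hours: Optional[int]
    # True means the repeat applies only when the operator knows the user is a minor.
    known_minors_only: bool = False


# Values are taken from the list above; they are not a legal reference.
REMINDER_RULES = {
    "New York": ReminderRule(repeat_every_hours=3),
    "California": ReminderRule(repeat_every_hours=3, known_minors_only=True),
    "Maine": ReminderRule(repeat_every_hours=None),
    "Utah": ReminderRule(repeat_every_hours=None),
}


def repeat_notice_due(state: str, hours_since_last_notice: float,
                      user_is_known_minor: bool) -> bool:
    """Return True if a repeat notice is due under the simplified rule for `state`."""
    rule = REMINDER_RULES[state]
    if rule.repeat_every_hours is None:
        return False
    if rule.known_minors_only and not user_is_known_minor:
        return False
    return hours_since_last_notice >= rule.repeat_every_hours
```

Keeping the rules in data rather than in branching logic makes it easier to see where states diverge, and to update one entry if a law changes.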

Disclosure has emerged as the baseline governance mechanism: relatively easy to implement, highly visible, and minimally disruptive to innovation.

Of note, Governor Newsom previously vetoed AB 1064, a more restrictive bill that might have functionally banned companion chatbots for minors. His message? The goal is safety, not prohibition.

Taken together, these actions show that California prefers:

- transparency

- crisis protocols

- youth notifications

…rather than outright bans.

This philosophy will likely shape legislative debates in 2026.

2. Safety Protocols & Suicide-Risk Mitigation

Only companion chatbot bills - not broader chatbot regulations - include self-harm detection and crisis-response requirements.

However, these provisions raise issues:

- Operators may need to analyze or retain chat logs, increasing privacy risk

- The law requires “evidence-based” detection methods but does not define the term

- Developers must decide what constitutes a crisis trigger

Ambiguity means compliance could differ dramatically across companies.

The Central Problem: AI That Protects Platforms, Not People

As both a parent and an AI policy advocate, I see SB 243 as progress – but also as a reflection of a deeper issue.

Laws like SB 243 are written to protect people, especially kids and vulnerable users. But the reality is that the AI systems being regulated were never designed around the needs, values, and boundaries of individual families. They were designed around the needs of platforms.

Companion chatbots today are largely engagement engines: systems optimized to keep users talking, coming back, and sharing more. A new report from Common Sense Media, Talk, Trust, and Trade-Offs: How and Why Teens Use AI Companions, found that of the 72% of U.S. teens that have used an AI companion, over half (52%) qualify as regular users - interacting a few times a month or more. A third use them specifically for social interaction and relationships, including emotional support, role-play, friendship, or romantic chats. For many teens, these systems are not a novelty; they are part of their social and emotional landscape.

That wouldn’t be inherently bad if these tools were designed with youth development and family values at the center. But they’re not. Common Sense’s risk assessment of popular AI companions like Character.AI, Nomi, and Replika concluded that these platforms pose “unacceptable risks” to users under 18, easily producing sexual content, stereotypes, and “dangerous advice that, if followed, could have life-threatening or deadly real-world impacts.” The platforms’ own terms of service often grant the companies broad, long-term rights over teens’ most intimate conversations, turning vulnerability into data.

This is where we have to be honest: disclosures and warnings alone don’t solve that mismatch. SB 243 and similar laws require “clear and conspicuous” notices that users are talking to AI, reminders every few hours to take a break, and disclaimers that chatbots may not be suitable for minors. Those are important: transparency matters. But for a 13- or 15-year-old, a disclosure is often just another pop-up to tap through. It doesn’t change the fact that the AI is designed to be endlessly available, validating, and emotionally sticky.

The Common Sense survey shows why that matters. Among teens who use AI companions:

- 33% have chosen to talk to an AI companion instead of a real person about something important or serious.

- 24% have shared personal or private information, like their real name, location, or personal secrets.

- About one-third report feeling uncomfortable with something an AI companion has said or done.

At the same time, the survey indicates that a majority still spend more time with real friends than with AI, and most say human conversations are more satisfying. That nuance is important: teens are not abandoning human relationships wholesale. But a meaningful minority are using AI as a substitute for real support in moments that matter most.

These same dynamics appear outside the world of chatbots. In our earlier analysis of Roblox’s AI moderation and youth safety challenges, we explored how large-scale platform AI struggles to distinguish between playful behavior, harmful content, and predatory intent, even as parents assume the system “will catch it.”

This is where “AI that protects platforms, not people” comes into focus. When parents and policymakers rely on platform-run AI to “detect” risk, it can create a false sense of security – as if the system will always recognize distress, always escalate appropriately, and always act in the child’s best interest. In practice, these models are tuned to generic safety rules and engagement metrics, not to the lived context of a specific child in a specific family. They don’t know whether your teen is already in therapy, whether your family has certain cultural values, or whether a particular topic is especially triggering.

Put differently: we are asking centralized models to perform a deeply relational role they were never built to handle. And every time a disclosure banner pops up or a three-hour reminder fires, it can look like “safety” without actually addressing the core problem - that the AI has quietly slipped into the space where a parent, counselor, or trusted adult should be.

The result is a structural misalignment:

- Platforms carry legal duties and add compliance layers.

- Teens continue to use AI companions for connection, support, and secrets.

- Parents assume “there must be safeguards” because laws now require them.

But no law can turn a platform-centric system into a family-centric one on its own. That requires a different architecture entirely: one where AI is owned by, aligned to, and accountable to the individual or family it serves, rather than the platform that hosts it.

The Next Phase: Personal AI That Serves Individuals, Not Platforms

Policy can set guardrails, but it cannot engineer empathy.

The future of safety will require personal AI systems that:

- are owned by individuals or families

- understand context, values, and emotional cues

- escalate concerns privately and appropriately

- do not store global chat logs

- do not generalize across millions of users

- protect people, not corporate platforms

Imagine a world where each family has its own AI agent, trained on their communication patterns, norms, and boundaries. An AI partner that can detect distress because it knows the user, not because it is guessing from a database of millions of strangers.

This is the direction in which responsible AI is moving, and it is at the heart of our work at Permission.

What to Expect in 2026

2025 was the first year of targeted chatbot regulation. 2026 may be the year of chatbot governance.

Expect:

- More state-level bills mirroring SB 243

- Increased federal involvement through the proposed GUARD Act

- Sector-specific restrictions on mental health chatbots

- AI oversight frameworks tied to age assurance and data privacy

- Renewed debates around bans vs. transparency-based models

States are beginning to experiment. Some will follow California’s balanced approach. Others may attempt stricter prohibitions. But all share a central concern: the emotional stakes of AI systems that feel conversational.

Closing Thoughts

As a mom here in San Diego, I’m grateful to see our state take this issue seriously. As Permission’s Chief Advocacy Officer, I also see where the next generation of protection must go. SB 243 sets the foundation, but the future will belong to AI that is personal, contextual, and accountable to the people it serves.

ASK Trading and Liquidity are Now Live on Base’s Leading DEX

We’re excited to share that the ASK/USDC liquidity pool is now officially live on Aerodrome Finance, the premier decentralized exchange built on Base. This milestone makes it easier than ever for ASK holders to trade, swap, and provide liquidity directly within the Coinbase ecosystem.

Why This Matters

- More access. You can now trade ASK directly through Aerodrome, Base’s premier DEX—and soon, through the Coinbase app itself, thanks to its new DEX integration.

- More liquidity. ASK liquidity is already live in the USDC/ASK pool, strengthening accessibility for everyone.

- More connection to real utility. As ASK continues to power the Permission ecosystem, this move brings its utility to DeFi, where liquidity meets data ownership and real demand for permissioned data.

How to Join In

- Visit the official USDC/ASK pool on Aerodrome.

- Always confirm the official ASK contract address on Base before trading:

0xBB146326778227A8498b105a18f84E0987A684b4

- You can trade, provide liquidity, or simply watch the pool evolve — it’s all part of growing ASK’s footprint on Base.
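If you want to double-check an address programmatically before trading, a minimal sketch might look like the following. It assumes only the contract address quoted above; the function name is hypothetical, and the check is a plain format-and-equality comparison. It does not validate the mixed-case checksum or query the chain, so treat it as a typo guard rather than proof of authenticity.

```python
import re

# Address copied from this post; re-confirm it against Permission's official channels.
OFFICIAL_ASK_ADDRESS = "0xBB146326778227A8498b105a18f84E0987A684b4"

# An EVM address is "0x" followed by exactly 40 hexadecimal characters.
_ADDRESS_PATTERN = re.compile(r"0x[0-9a-fA-F]{40}")


def matches_official_address(candidate: str) -> bool:
    """True only if `candidate` is well-formed and equals the official address."""
    candidate = candidate.strip()
    if not _ADDRESS_PATTERN.fullmatch(candidate):
        return False
    # Compare case-insensitively, since wallets and explorers differ in letter casing.
    return candidate.lower() == OFFICIAL_ASK_ADDRESS.lower()
```

A single changed character is enough for the function to return False, which is the kind of lookalike address this step is meant to catch.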

Building on Base’s Vision

Base has quickly become one of the most vibrant ecosystems in crypto, driven by the vision that on-chain should be open, affordable, and accessible to everyone. Its rapid growth reflects a broader shift toward usability and real-world applications, something that aligns perfectly with Permission’s mission.

As Coinbase CEO Brian Armstrong has emphasized, Base isn’t just another Layer-2 — it’s the foundation for bringing the next billion users on-chain. ASK’s launch on Base taps directly into that movement, expanding access to a global audience and connecting Permission’s data-ownership mission to one of the most forward-thinking ecosystems in Web3.

100,000+ ASK Holders on Base 🎉

As of this writing, we’re proud to share that ASK has surpassed 100,000 holders on Base. This is a huge milestone that reflects the growing strength and reach of the Permission community.

From early supporters to new users discovering ASK through Base and Aerodrome, this growth underscores the demand for consent-driven data solutions that reward people for the value they create.

Providing Liquidity Has Benefits

When you add liquidity to the USDC/ASK pool, you’re helping deepen the market and improve access for other community members. In return, you’ll earn a share of trading fees generated by the pool.

And as Aerodrome continues to expand its ve(3,3)-style governance model, liquidity providers could see additional incentive opportunities in the future. Nothing is live yet, but the structure is there, and we’re watching closely as the Base DeFi ecosystem evolves.

It’s a great way for long-term ASK supporters to stay engaged and help grow the ecosystem while participating in DeFi on one of crypto’s fastest-growing networks.

What’s Next

ASK’s presence on Base is just the beginning. We’re continuing to build toward broader omnichain accessibility, more liquidity venues, and new ways to earn ASK. Each milestone strengthens ASK’s position as the tokenized reward for permission.

Learn More

Disclaimer:

This post is for informational purposes only and does not constitute financial, investment, or legal advice. Token values can fluctuate and all participation involves risk. Always do your own research before trading or providing liquidity.

Online Safety and the Limits of AI Moderation: What Parents Can Learn from Roblox

Roblox isn’t just a game — it’s a digital playground with tens of millions of daily users, most of them children between 9 and 15 years old.

For many, it’s the first place they build, chat, and explore online. But as with every major platform serving young audiences, keeping that experience safe is a monumental challenge.

Recent lawsuits and law-enforcement reports highlight how complex that challenge has become. Roblox reported more than 13,000 cases of sextortion and child exploitation in 2023 alone — a staggering figure that reflects not negligence, but the sheer scale of what all digital ecosystems now face.

The Industry’s Safety Challenge

Most parents assume Roblox and similar platforms are constantly monitored. In reality, the scale is overwhelming: millions of messages, interactions, and virtual spaces every hour. Even the most advanced AI moderation systems can miss the subtleties of manipulation and coded communication that predators use.

Roblox has publicly committed to safety and continues to invest heavily in AI moderation and human review — efforts that deserve recognition. Yet as independent researcher Ben Simon (“Ruben Sim”) and others have noted, moderation at this scale is an arms race that demands new tools and deeper collaboration across the industry.

By comparison, TikTok employs more than 40,000 human moderators, over ten times Roblox’s reported staff, while having only roughly three times the daily active users. The contrast underscores a reality no platform escapes: AI moderation is essential, but insufficient on its own.

When Games Become Gateways

Children as young as six have encountered inappropriate content, virtual strip clubs, or predatory advances within user-generated spaces. What often begins as a friendly in-game chat can shift into private messages, promises of Robux (Roblox’s digital currency), or requests for photos and money.

And exploitation isn’t always sexual. Many predators use financial manipulation, convincing kids to share account credentials or make in-game purchases on their behalf.

For parents, Roblox’s family-friendly design can create a false sense of security. The lesson is not that Roblox is unsafe, but that no single moderation system can substitute for parental awareness and dialogue.

Even when interactions seem harmless, kids can give away more than they realize.

A name, a birthday, or a photo might seem trivial, but in the wrong hands it can open the door to identity theft.

The Hidden Threat: Child Identity Theft

Indeed, a lesser-known but equally serious risk is identity theft.

When children overshare personal details — their full name, birthdate, school, address, or even family information — online or with strangers, that data can be used to impersonate them.

Because minors rarely have active financial records, child identity theft often goes undetected for years, sometimes until they apply for a driver’s license, a student loan, or their first job. By then, the damage can be profound: financial loss, credit score damage, and emotional stress. Restoring a stolen identity can require years of effort, documentation, and legal action.

The best defense is prevention.

Teach children early why their personal information should never be shared publicly or in private chats — and remind them that real friends never need to know everything about you to play together online.

AI Moderation Needs Human Partnership

AI moderation remains reactive.

Algorithms flag suspicious language, but they can’t interpret tone, hesitation, or the subtle erosion of boundaries that signals grooming.

Predators evolve faster than filters, which means the answer isn’t more AI for the platform, but smarter AI for the family.

The Limits of Centralized AI

The truth is, today’s moderation AI isn’t really designed to protect people; it’s designed to protect platforms. Its job is to reduce liability, flag content, and preserve brand safety at scale. But in doing so, it often treats users as data points, not individuals.

This is the paradox of centralized AI safety: the bigger it gets, the less it understands.

It can process millions of messages a second, but not the intent behind them. It can delete an account in a millisecond, but can’t tell whether it’s protecting a child or punishing a joke.

That’s why the future of safety can’t live inside one corporate algorithm. It has to live with the individual — in personal AI agents that see context, respect consent, and act in the user’s best interest. Instead of a single moderation brain governing millions, every family deserves an AI partner that watches with understanding, not suspicion.

A system that exists to protect them, not the platform.

The Future of Child Safety: Collaboration, Not Competition

The Roblox story underscores an industry-wide truth: safety can’t be one-size-fits-all.

Every child’s online experience is different, and protecting it requires both platform vigilance and parent empowerment.

At Permission, we believe the next generation of online safety will come from collaboration, not competition. Instead of replacing platform systems, our personal AI agents complement them — giving parents visibility and peace of mind while supporting the broader ecosystem of trust that companies like Roblox are working to build.

From one-size-fits-all moderation to one-AI-per-family insight — in harmony with the platforms kids already love.

Each family’s AI guardian can learn their child’s unique patterns, highlight potential risks across apps, and summarize activity in clear reports that parents control. That’s what we mean by ethical visibility — insight without invasion.

You can explore this philosophy further in our upcoming piece:

➡️ Monitoring Without Spying: How to Build Digital Trust With Your Child (link coming soon)

What Parents Can Do Now

Until personalized AI guardians are widespread, families can take practical steps today:

- Talk early and often. Make online safety part of everyday conversation.

- Ask, don’t accuse. Curiosity builds trust; interrogation breeds secrecy.

- Play together. Experience games and chat environments firsthand.

- Set boundaries collaboratively. Agree on rules, timing, and social norms.

- Teach red flags. Encourage your child to tell you when something feels wrong — without fear of punishment.

A Shared Responsibility

The recent Roblox lawsuits remind all of us just how complicated parenting in the digital world can feel. It’s not just about rules or apps: it’s about guiding your kids through a space that changes faster than any of us could have imagined!

And the truth is, everyone involved wants the same thing: a digital world where kids can explore safely, confidently, and with the freedom to just be kids.

At Permission, we’re committed to building an AI that understands what matters, respects your family’s values and boundaries, and puts consent at the center of every interaction.

Meet the Permission Agent: The Future of Data Ownership

Update: We’ve since shared more detail on how users earn with the Permission Agent. Read “Share to Earn: How Earning Works with the Permission Agent.”

For years, Permission has championed a simple idea: your data has value, and you deserve to be rewarded for it. Our mission is clear: to enable individuals to own their data and be compensated when it’s used. Until now, we’ve made that possible through our opt-in experience, giving you the choice to engage and earn.

But the internet is evolving, and so are we.

Now, with the rise of AI, our vision has never been more relevant. The world is waking up to the fact that data is the fuel driving digital intelligence, and individuals should be the ones who benefit directly from it.

The time is now. AI has created both the urgency and the infrastructure to finally make our vision real. The solution is the "Permission Agent: The Personal AI that Pays You."

What is the Permission Agent?

The Permission Agent is your own AI-powered digital assistant - it knows you, works for you, and turns your data into a revenue stream.

Running seamlessly in your browser, it manages your consent across the digital world while identifying the moments when your data has value, making sure you are the one who gets rewarded.

In essence, it acts as your personal representative in the online economy, constantly spotting opportunities, securing your rewards, and giving you back control of your digital life.

Human data powers the next generation of AI, and for it to be trusted it must be verified, auditable, and permissioned. Most importantly, it must reward the people who provide it. With the Permission Agent, this vision becomes reality: your data is safeguarded, your consent is respected, and you are compensated every step of the way.

This is more than a seamless way to earn. It’s a bold step toward a future where the internet is rebuilt around trust, transparency, and fairness - with people at the center.

Passive Earning and Compounded Referral Rewards

With the Permission Agent, earning isn’t just smarter - it’s continuous and always working in the background. As you browse normally, your Agent quietly unlocks opportunities and secures rewards on your behalf.

Beyond this passive earning, the value multiplies when you invite friends to Permission. Instead of a one-time referral bonus, you’ll earn a percentage of everything your friends earn, for life. Each time they browse, engage, and collect rewards, you benefit too — and the more friends you bring in, the greater your earnings become.

All rewards are paid in $ASK, the token that powers the Permission ecosystem. Whether you choose to redeem, trade for cash or crypto, or save and accumulate, the more you collect, the more value you unlock.

Changes to Permission Platform

Our mission has always been to create a fair internet - one where people truly own their data and get rewarded for it. The opt-in experience was an important first step, opening the door to a world where individuals could engage and earn. But now it’s time to evolve.

Effective October 1st, the following platform changes will be implemented:

- Branded daily offers will no longer appear in their current form.

- The Earn Marketplace will be transformed into Personalize Your AI - a new way to earn by taking actions that help your Agent better understand you, bringing you even greater personalization and value.

- The browser extension will be the primary surface for earning from your data, and, should you choose to activate passive earning, you’ll benefit from ongoing rewards as your Agent works for you in the background.

With the Permission Agent, you gain a proactive partner that works for you around the clock — unlocking rewards, protecting your data, and ensuring you benefit from every opportunity, without needing to constantly make manual decisions.

How to Get Started

Getting set up takes just a few minutes:

- Download the Permission Agent (browser extension)

- Activate it to claim your ASK token bonus

- Browse as usual — your Agent works in the background to find earning opportunities for you

The more you use it, the more it learns how to unlock rewards and maximize the value of your time online.

A New Era of the Internet

This isn’t just a new tool - it’s a turning point.

The Permission Agent marks the beginning of a digital world where people truly own their data, decide when and how to share it, and are rewarded every step of the way.

Web5 and the Age of AI: Why It’s Time to Own Your Data

The Internet Wasn’t Built for You

The internet has always promised more than it delivered. Web1 gave us access. Web2 gave us interactivity. Web3 introduced decentralization.

But none of them fully delivered on the promise of giving users actual control over their identity and data. Each iteration has made technical strides, but has often traded one form of centralization for another. The early internet was academic and open but difficult to use. Web2 simplified access and enabled user-generated content, but consolidated power within a handful of massive platforms. Web3 attempted to shift control back to individuals, but in many cases it only replaced platform monopolies with protocol monopolies, often steered by investors rather than users.

This brings us to the newest proposal in the evolution of the internet: Web5. It is not simply a new version number. It is an entirely new architecture and a philosophical reset. Web5 is not about adding features to the existing internet. It is about reclaiming its original promise: a digital environment where people are the primary stakeholders and where privacy, data ownership, and user autonomy are fundamental principles rather than afterthoughts.

What Is Web5?

Web5 is a proposed new iteration of the internet that emphasizes user sovereignty, decentralized identity, and data control at the individual level. The term was introduced by TBD, a division of Block (formerly Square), led by Jack Dorsey. The concept merges the usability and familiarity of Web2 with the decentralization aims of Web3, but seeks to go further by eliminating dependencies on centralized platforms, third-party identities, and even the token-centric incentives common in the Web3 space.

At the heart of Web5 is a recognition that true decentralization cannot exist unless individuals can own and manage their identity and data independently of the platforms and applications they use. Web5 imagines a future where your digital identity is yours alone and cannot be revoked, sold, or siloed by anyone else. Your data lives in a secure location you control, and you grant or revoke access to it on your terms.

In essence, Web5 is not about redesigning the internet from scratch. It is about rewriting its relationship with the people who use it.

The Building Blocks of Web5

Web5 is built on several core components that enable a truly user-centric and decentralized experience. These include:

Decentralized Identifiers (DIDs)

DIDs are globally unique identifiers created, owned, and controlled by individuals. Unlike traditional usernames, email addresses, or OAuth logins, DIDs are not tied to any centralized provider. They are cryptographic identities that function independently of any specific platform.

In Web5, your DID serves as your universal passport. You can use it to authenticate yourself across different services without having to create new accounts or hand over personal data to each provider. More importantly, your DID is yours alone. No company or platform can take it away from you, lock you out, or monetize it without your permission.
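To make this concrete, a DID is written as a short string of the form did:method:identifier. The sketch below splits such a string into its parts. The pattern is a simplified reading of the W3C DID syntax (it is looser about percent-encoding than the full grammar), and the names parse_did and ParsedDid are illustrative, not part of any Web5 SDK.

```python
import re
from typing import NamedTuple

# Simplified pattern: "did:", a lowercase method name, then a method-specific
# identifier that may contain colons but must not end with one.
_DID_PATTERN = re.compile(r"did:([a-z0-9]+):([A-Za-z0-9._%:-]*[A-Za-z0-9._%-])")


class ParsedDid(NamedTuple):
    method: str
    identifier: str


def parse_did(did: str) -> ParsedDid:
    """Split a DID string into its method and method-specific identifier."""
    match = _DID_PATTERN.fullmatch(did)
    if match is None:
        raise ValueError(f"not a valid DID: {did!r}")
    return ParsedDid(method=match.group(1), identifier=match.group(2))
```

For instance, parse_did("did:example:123456789abcdefghi") yields the method "example" and the identifier "123456789abcdefghi". Nothing in the string names an email provider or a platform account.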

Verifiable Credentials (VCs)

Verifiable credentials are digitally signed claims about a person or entity. Think of them as secure, cryptographically verifiable versions of driver’s licenses, university degrees, or customer loyalty cards.

These credentials are stored in a user’s own digital wallet and are linked to their DID. They can be presented to other parties as needed, without requiring a centralized intermediary. For example, instead of submitting your passport to a website for identity verification, you could present a VC that confirms your citizenship status or age, verified by an issuer you trust.

This reduces the need for repetitive, invasive data collection and helps prevent identity theft, fraud, and data misuse.
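The issue-then-verify flow can be sketched with nothing but the Python standard library. One loud caveat: real verifiable credentials rely on public-key signatures, so a verifier never needs the issuer's secret. The example below swaps in an HMAC (a shared-secret code) purely as a stand-in to show the shape of the flow. The field names are simplified and hypothetical, not the W3C data model.

```python
import hashlib
import hmac
import json


def _digest(issuer_key: bytes, payload: dict) -> str:
    # Serialize with sorted keys so the same claims always produce the same bytes.
    message = json.dumps(payload, sort_keys=True, separators=(",", ":")).encode()
    return hmac.new(issuer_key, message, hashlib.sha256).hexdigest()


def issue_credential(issuer_key: bytes, issuer_did: str,
                     subject_did: str, claims: dict) -> dict:
    """Issuer side: bundle the claims with a proof computed over them."""
    payload = {"issuer": issuer_did, "subject": subject_did, "claims": claims}
    return {**payload, "proof": _digest(issuer_key, payload)}


def verify_credential(issuer_key: bytes, credential: dict) -> bool:
    """Verifier side: recompute the proof and compare it in constant time."""
    payload = {k: v for k, v in credential.items() if k != "proof"}
    expected = _digest(issuer_key, payload)
    return hmac.compare_digest(expected, str(credential.get("proof", "")))
```

A credential carrying only {"age_over_18": True} lets a site confirm age without ever seeing a birthdate or passport, and changing that claim after issuance makes verification fail.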

Decentralized Web Nodes (DWNs)

DWNs are user-controlled data stores that operate in a peer-to-peer manner. They serve as both storage and messaging layers, allowing individuals to manage and share their data without relying on centralized cloud infrastructure.

In practice, this means that your messages, files, and personal information live on your own node. Applications can request access to specific data from your DWN, and you decide whether to grant or deny that request. If you stop using the app or no longer trust it, you simply revoke access. Your data stays with you.

DWNs make it possible to separate data from applications. This creates a clear boundary between ownership and access and transforms the way digital services are designed.
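The grant-and-revoke pattern described here can be pictured with a tiny in-memory model. This is a sketch of the idea only: the class and method names are hypothetical, and a real DWN involves networking, encryption, and a defined protocol that this example leaves out.

```python
class PersonalDataNode:
    """Hypothetical in-memory stand-in for a user-controlled data store."""

    def __init__(self):
        self._records = {}    # record key -> value, owned by the user
        self._grants = set()  # (app_id, record key) pairs the user has approved

    def put(self, key, value):
        self._records[key] = value

    def grant(self, app_id, key):
        """The user allows one app to read one specific record."""
        self._grants.add((app_id, key))

    def revoke(self, app_id):
        """The user withdraws every grant previously given to an app."""
        self._grants = {g for g in self._grants if g[0] != app_id}

    def read(self, app_id, key):
        """Apps call this; access is checked on every single read."""
        if (app_id, key) not in self._grants:
            raise PermissionError(f"{app_id} has no access to {key!r}")
        return self._records[key]
```

Because the permission check happens at read time, revoking access takes effect immediately, and the record itself never leaves the node's storage.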

Decentralized Web Apps (DWAs)

DWAs are applications that run in a web environment but operate differently than traditional apps. Instead of storing user data in their own back-end infrastructure, DWAs are designed to request and interact with data that resides in a user’s DWN.

This architectural shift changes the power dynamic between users and developers. In Web2, developers collect and control your data. In Web5, they build applications that respond to your data preferences. The app becomes a guest in your ecosystem, not the other way around.

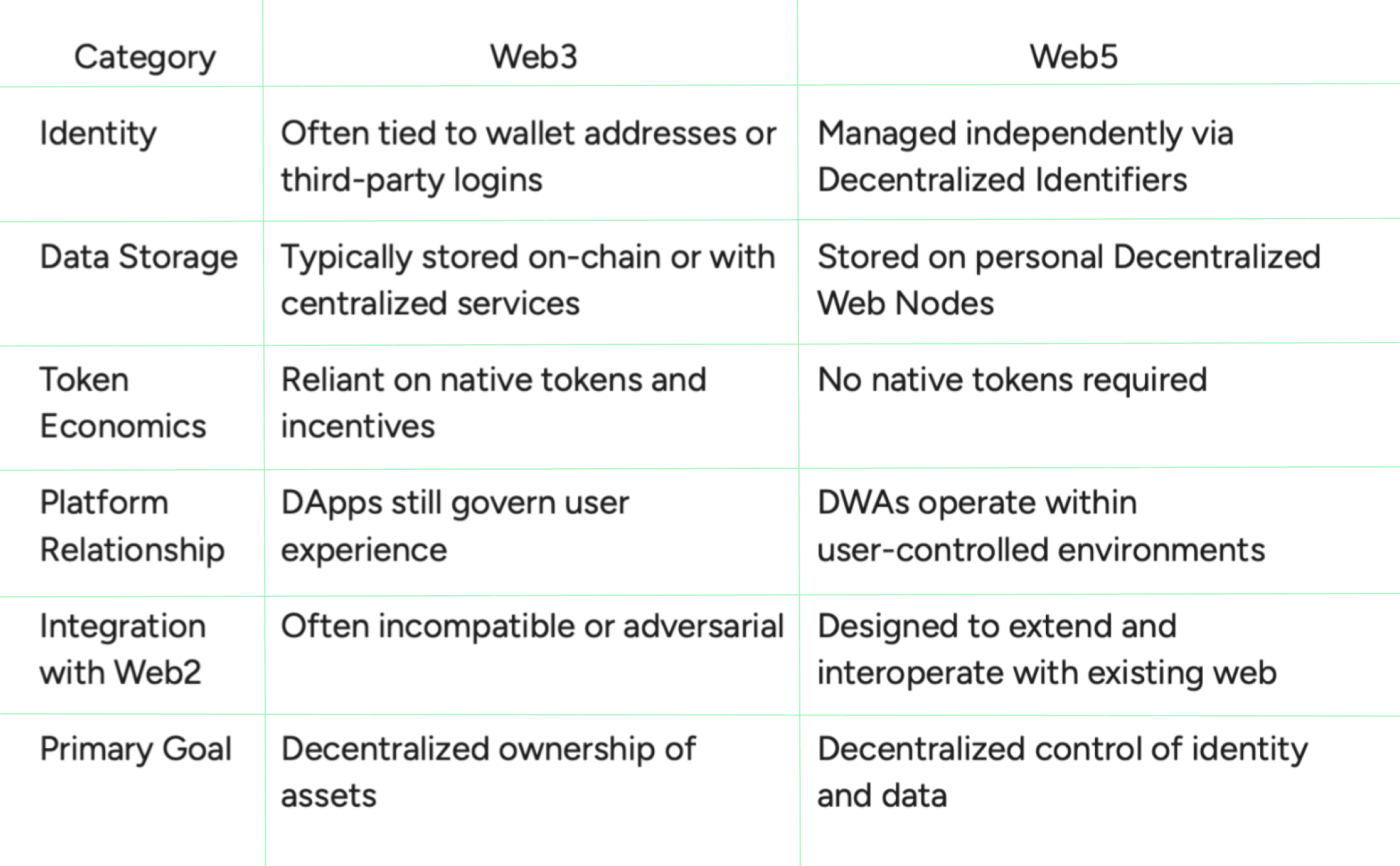

Web5 vs. Web3: A Clearer Distinction

While Web3 and Web5 share some vocabulary, they differ significantly in their goals and structure.

Web3 has been a meaningful step toward decentralization, particularly in finance and asset ownership. However, it often recreates centralization through the influence of early investors, reliance on large protocols, and opaque governance structures. Web5 aims to eliminate these dependencies altogether.

Why Web5 Matters in a Post-Privacy Era

Data privacy is no longer a niche concern. It is a mainstream issue affecting billions of people. From the fallout of the Cambridge Analytica scandal to the enactment of privacy regulations like the EU’s GDPR and California’s CPRA, there is a growing consensus that the existing digital model is broken.

Web5 does not wait for regulatory pressure to enforce ethical practices. It bakes them into the infrastructure. By placing individuals at the center of data ownership and removing the need for constant surveillance-based monetization, Web5 allows for the creation of a digital ecosystem that respects boundaries, preferences, and consent by design.

In a world where AI is increasingly powered by massive data collection, Web5 offers a powerful counterbalance. It allows individuals to decide whether their data is included in training models, marketing campaigns, or platform personalization strategies.

How AI Supercharges the Promise of Web5

Artificial intelligence is rapidly reshaping every part of the internet — from the way content is generated to how decisions are made about what we see, buy, and believe. But the power behind AI doesn’t come from the models themselves. It comes from the data they’re trained on.

Today, that data is often taken without consent. Every click, view, scroll, and purchase becomes raw material for algorithms, enriching platforms while users are left with no control and no compensation.

This is where Web5 comes in.

By combining the decentralization goals of Web3 with the intelligence of AI, Web5 offers a blueprint for a more ethical digital future — one where individuals decide how their data is used, who can access it, and whether it should train an AI at all. In a Web5 world, your data lives in your own vault, tied to your decentralized identity. You can choose to share it, restrict it, or even monetize it.

That’s the real promise: an internet that respects your privacy and pays you for your data.

Rather than resisting AI, Web5 gives us a way to integrate it responsibly. It ensures that intelligence doesn’t come at the cost of autonomy — and that the next era of the internet is built around consent, not extraction.

The Role of Permission.io in the Web5 Movement

At Permission.io, we have always believed that individuals should benefit from the value their data creates. Our platform is built around the idea of earning through consent. Web5 provides the technological framework that aligns perfectly with this philosophy.

We do not believe that privacy and innovation are mutually exclusive. Instead, we believe that ethical data practices are the foundation of a more effective, sustainable, and human-centered internet. That is why our $ASK token allows users to earn rewards for data sharing in a transparent, voluntary manner.

As Web5 standards evolve, we will continue to integrate its principles into our ecosystem. Whether through decentralized identity, personal data vaults, or privacy-first interfaces, Permission.io will remain at the forefront of giving users control and compensation in a world driven by AI and data.

Conclusion: The Internet Is Growing Up

The internet is entering its fourth decade. Its adolescence was defined by explosive growth, centralization, and profit-first platforms. Its adulthood must be defined by ethics, sovereignty, and resilience.

Web5 is not just a concept. It is a movement toward restoring balance between platforms and people. It challenges developers to build differently. It invites users to reclaim their autonomy. And it sets a precedent for how we should think about identity, ownership, and trust in a digitally saturated world.

Web5 is not inevitable. It is a choice. But it is a choice that more people are ready to make.

Own Your Data. Build the Future.

Permission.io is proud to be a participant in the new internet—one where you are not the product, but the owner. If you believe that the future of the internet should be user-driven, privacy-first, and reward-based, you are in the right place.

Start earning with Permission.

Protect your identity.

Take control of your data in Web5 and the age of AI.

AI Has a Data Problem. Identic AI Has the Fix.

Artificial Intelligence is advancing faster than anyone imagined. But underneath the innovation lies a fundamental problem: it runs on stolen data.

Your personal searches, clicks, purchases, and habits have been quietly scraped, repackaged, and monetized, all without your consent. Big Tech built today’s most powerful AI systems on a mountain of behavioral data that users never agreed to give. It’s efficient, yes. But it’s also broken.

Identic AI offers a new path. A vision of artificial intelligence that doesn’t exploit you, but respects you. One where privacy, accuracy, and transparency aren’t afterthoughts; they’re the foundation.

The Current Landscape of AI

AI is reshaping industries at breakneck speed. From advertising to healthcare to finance, algorithms are optimizing targeting, diagnostics, forecasting, and more. We are witnessing smarter search, personalized shopping, and hyper-automated digital experiences.

But what powers all of this intelligence? The answer is simple: data. Every interaction, swipe, and search adds fuel to the machine. The smarter AI gets, the more it demands. And that’s where the cracks begin to show.

The Data Problem in AI

Most of today’s AI models are trained on data that was never truly given. It is scraped from websites, logged from apps, and extracted from your online behavior without explicit consent. Then it is bought, sold, and resold with zero transparency and zero benefit to the person who created it.

This system isn’t just flawed; it is exploitative. The very people generating the data are left out of the value chain. Their information powers billion-dollar innovations, while they are kept in the dark.

Identic AI: A New Paradigm for Ethical AI

Identic AI is a concept that reimagines the foundation of artificial intelligence. Instead of running on unconsented data, it operates on permissioned information, which is data that users have explicitly agreed to share.

It’s powered by zero-party data, voluntarily and transparently contributed by individuals. This creates not only a more ethical system, but a smarter one. Data shared intentionally is often more accurate, more contextual, and more valuable.

Identic AI ensures transparency from end to end. Users know exactly what they’re sharing, how it’s being used, and what they gain in return.
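To make the idea of permissioned data concrete, here is a minimal sketch of a consent filter. It is an illustration only, not how Permission or any Identic AI system is implemented: the `DataRecord` class, the `permissioned` function, and the purpose labels are all hypothetical names chosen for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataRecord:
    """Hypothetical record: who shared it, what it is, and what it may be used for."""
    user_id: str
    category: str
    consented_purposes: frozenset  # purposes the user explicitly opted in to


def permissioned(records, purpose):
    """Keep only records whose owner explicitly opted in to this purpose."""
    return [r for r in records if purpose in r.consented_purposes]


records = [
    DataRecord("u1", "survey_answers", frozenset({"ai_training", "ads"})),
    DataRecord("u2", "browsing", frozenset()),          # no consent given
    DataRecord("u3", "purchases", frozenset({"ads"})),  # ads only
]

training_set = permissioned(records, "ai_training")
print([r.user_id for r in training_set])  # ['u1']
```

The point of the sketch is that consent is checked per purpose before any data is used, so a record shared for advertising never ends up in a training set by default.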

How Identic AI Solves Major AI Challenges

Privacy Compliance

Identic AI is designed to align with global privacy laws like GDPR and CCPA. Instead of retrofitting compliance, it begins with consent by default.

Trust and Transparency

It eliminates the "black box" dynamic. Users can see how their data is used to train and fuel AI models, which restores confidence in the process.

Data Accuracy

Willingly shared data is more reliable. When users understand the purpose, they provide better inputs, which leads to better outputs.

Fair Compensation

Identic AI proposes a model where data contributors are no longer invisible. They are participants, and they are rewarded for their contributions.

The Future with Identic AI

Imagine a digital world where every interaction is a clear value exchange. Where people aren't just data points but stakeholders. Where AI systems respect boundaries instead of bypassing them.

Identic AI sets the precedent for this future. It proves that artificial intelligence can be powerful without being predatory. Performance and ethics are not mutually exclusive; they are mutually reinforcing.

How Permission Powers the Identic AI Movement

At Permission.io, we’re building the infrastructure to bring this model to life. Our platform enables users to earn ASK tokens in exchange for sharing data, with full knowledge, full control, and full transparency.

We’re laying the groundwork for AI systems that run on consent, not coercion. Our mission is to create a more equitable internet, where users don’t just use technology. They benefit from it.

Your Data. Your Terms. Your Share of the AI Economy.

If you’re tired of giving your data away for free, join a platform that puts you back in control.

Sign up at Permission.ai and start earning with every click, every search, and every insight you choose to share.

Unlock the Power of ASK: How to Earn, Share, and Multiply Your Rewards

We are thrilled to announce that Permission's generous new referral program is live as of May 15, 2025.

At Permission, we believe your data is extremely valuable—and YOU should be rewarded when you share it. ASK is the fuel that powers your journey in the Permission ecosystem. As a Permission user, you’re not just taking back control of your data—you’re owning it and earning real rewards every step of the way.

Every time you show up, take action, or invite friends into Permission, you collect ASK tokens. These tokens track your contributions and grow your stake in a fair and transparent digital economy.

Think of ASK as your digital footprint. The more active you are—and the more friends you invite—the more you stack your ASK.

How Do You Earn ASK Tokens?

- Sign Up and Verify: When you create and verify your Permission account, you earn ASK instantly.

- Opt In to Share Your Data: Choose which data you share. Each time you participate in opt-in campaigns—whether completing a survey or engaging with a brand—you earn ASK based on the value of that data.

- Refer Friends Who Sign Up and Verify: Share your unique referral link and earn ASK when your friends join Permission.

- Earn on Your Referrals' Data Sharing Activities: When your direct referrals participate in data-sharing campaigns, you earn 10% of the ASK they earn—passive income generated simply by expanding your network.

- Earn When Your Referrals Grow Their Network: When one of your direct referrals hits 10 successful referrals, you receive a one-time bonus of 10,000 ASK—and your referral earns 5,000 ASK for expanding the network.

The number of tokens you collect depends on how active you are and how big your referral network becomes. Every interaction adds to your ASK stack, growing your earnings with each new connection.

Invite and Earn More ASK

Inviting friends and family is where the exponential potential lies. Here is how our active Permission Referral Network works:

- Your invitee signs up and verifies their account.

- They receive their full signup reward immediately.

- You receive a signup bonus and 10% of any ASK they earn from data-sharing and campaigns.

There is no limit to the number of people you can invite. Each new verified user becomes a source of ongoing rewards.

Milestone Bonuses

We reward sustained growth. As your direct referrals accumulate, you unlock milestone bonuses:

- 5 Referrals: Receive a one-time bonus of 5,000 ASK.

- 10 Referrals: Receive a one-time bonus of 10,000 ASK.

- Referral Milestones: When one of your direct referrals hits 10 successful referrals, you receive a one-time bonus of 10,000 ASK—and your referral earns 5,000 ASK for expanding the network.

Top referrers gain early access to special earning opportunities and beta features.
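The milestone tiers above can be sketched as a small function. This is a rough illustration, not the official reward logic: the function name `milestone_bonus` is hypothetical, and it assumes that the 5-referral and 10-referral bonuses stack and that the referral-milestone bonus is paid once for each direct referral who reaches 10 referrals of their own. The Terms of Use remain the authority on how bonuses are actually applied.

```python
def milestone_bonus(direct_referrals, referrals_with_ten=0):
    """Total one-time milestone bonuses in ASK.

    Assumptions (not confirmed by the program rules):
    - the 5-referral and 10-referral bonuses are cumulative;
    - 10,000 ASK is paid per direct referral who reaches 10 referrals.
    """
    bonus = 0
    if direct_referrals >= 5:
        bonus += 5_000
    if direct_referrals >= 10:
        bonus += 10_000
    bonus += 10_000 * referrals_with_ten
    return bonus


print(milestone_bonus(4))      # 0
print(milestone_bonus(5))      # 5000
print(milestone_bonus(10))     # 15000
print(milestone_bonus(10, 1))  # 25000
```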

How It Works in Practice

Your referral earnings are calculated and disbursed once a month to keep things predictable. Here is the timeline:

- By the end of each month: We tally all new direct referrals who have signed up, verified, and engaged.

- On the 15th of the next month: We deposit your 10% of their earned ASK and any milestone rewards directly into your Permission wallet.

- Dashboard Access: You can view detailed referral and bonus history on your dashboard at any time.
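If you want to work out when a given month's referral earnings should land, the timeline above reduces to "the 15th of the following month." Here is a minimal sketch of that rule; the function name `payout_date` is hypothetical and the code simply encodes the schedule as described here, without accounting for weekends, holidays, or processing delays.

```python
from datetime import date


def payout_date(activity_day: date) -> date:
    """Return the 15th of the month after the one in which the activity occurred."""
    if activity_day.month == 12:
        # December activity rolls over into January of the next year.
        return date(activity_day.year + 1, 1, 15)
    return date(activity_day.year, activity_day.month + 1, 15)


print(payout_date(date(2025, 5, 20)))   # 2025-06-15
print(payout_date(date(2025, 12, 31)))  # 2026-01-15
```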

Real-World Examples

Example 1: Alex's Growth Story

Alex invites Jamie to Permission. Jamie signs up and verifies her account, earning 1,000 ASK. Alex also receives 1,000 ASK as a signup bonus. Over the next month, Jamie shares her data and completes campaigns, earning 5,000 ASK. Alex gets 10%, receiving 500 ASK automatically.

The next month, Jamie refers her friend Morgan, who signs up, verifies, and starts earning ASK. Because Morgan is part of Jamie’s network, Alex earns another 10% of Morgan’s ASK activity, all without lifting a finger.

By the end of three months, Alex has earned 1,000 ASK (signup), 500 ASK (Jamie's activity), and 200 ASK (Morgan's activity) — 1,700 ASK in total from just one initial referral.

Bonus Time: In Month 4, Jamie reaches 10 successful referrals. Alex receives a 10,000 ASK bonus, and Jamie earns 5,000 ASK as a reward for building her network.
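For readers who want to check the math, Alex's numbers can be tallied in a few lines. This is only a worked recap of the figures quoted in the example: the variable names are made up for illustration, and the 200 ASK from Morgan's activity is taken as given, since the example does not state how much Morgan earned.

```python
REFERRAL_SHARE_PERCENT = 10  # Alex's share of a referral's campaign earnings

signup_bonus = 1_000                                # paid when Jamie verifies
from_jamie = 5_000 * REFERRAL_SHARE_PERCENT // 100  # 10% of Jamie's 5,000 ASK
from_morgan = 200                                   # figure quoted in the example

three_month_total = signup_bonus + from_jamie + from_morgan
month_four_total = three_month_total + 10_000       # bonus when Jamie hits 10 referrals

print(from_jamie)         # 500
print(three_month_total)  # 1700
print(month_four_total)   # 11700
```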

Example 2: Building a Passive ASK Tree

Imagine Sarah refers five friends, each of whom verifies and starts earning ASK. Sarah receives 1,000 ASK per friend, totaling 5,000 ASK. Now, those friends begin referring others. Sarah not only receives 10% of her direct referrals' earnings but also hits the Milestone Bonus for 5 referrals, unlocking an additional 5,000 ASK.

Sarah’s network continues to grow, each branch feeding back into her ASK balance. This is the true power of exponential growth—small actions that multiply into real, ongoing rewards.
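Sarah's scenario can be turned into a small calculator to see how her balance changes with her friends' activity. Treat this as a sketch under stated assumptions: the function name `sarah_total` is hypothetical, the friends' earnings are invented for illustration, only direct referrals are counted, only the 5-referral milestone is modeled, and the 10% share is rounded down to a whole ASK.

```python
def sarah_total(friend_earnings, signup_bonus=1_000, share_percent=10):
    """ASK Sarah collects from direct referrals in the five-friend scenario.

    friend_earnings: campaign ASK earned by each direct referral.
    Only the 5-referral milestone (5,000 ASK) is modeled here.
    """
    friends = len(friend_earnings)
    signups = signup_bonus * friends
    share = sum(friend_earnings) * share_percent // 100  # assumed to round down
    milestone = 5_000 if friends >= 5 else 0
    return signups + share + milestone


# Before her friends earn anything: 5 signup bonuses plus the milestone.
print(sarah_total([0, 0, 0, 0, 0]))  # 10000

# Hypothetical campaign earnings for the five friends (not from the article).
print(sarah_total([2_000, 1_000, 3_000, 500, 1_500]))  # 10800
```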

The Power of Quality Over Quantity

The real secret to maximizing your ASK earnings isn’t just about bringing in tons of people—it’s about bringing in the right people. High-quality, engaged users mean more data sharing, more opt-ins, and more ASK flowing back to you.

Invite friends, colleagues, family—anyone who wants to take control of their data and earn along the way. One good referral can spark a whole network of growth.

Why This Matters: Passive Earnings and Data Monetization

When brands use the zero-party data you share for targeted marketing, or when it’s fed into AI models to power smarter campaigns, you earn even more ASK.

Every time your data is used in an AI marketing campaign, you get paid. And when your referrals permission their data and it’s leveraged in targeted advertising, YOU EARN TOO.

Stack Your ASK: More Data, More Earnings

One of the biggest opportunities within the Permission ecosystem is the ability to stack your ASK. The more data you share—on your terms—the more you earn.

- Wallet connections: Share on-chain data for deeper insights and earn more.

- Survey participation: Answer questions about your preferences and earn additional ASK.

- Permission Connect: Opt into branded offers and get rewarded instantly.

- AI-Powered Campaigns: When your data is fed into AI models for targeted marketing, you continue to earn ASK.

Every click, every share, and every opt-in that happens in your network stacks your ASK. And when you invite others, that stack only grows. It’s not just about earning—it’s about building a foundation for passive income that lasts.

Get Ready. Start Earning. Own Your Data.

This is just the beginning. The Permission Referral Network will change how you think about earning online. Don’t just watch it happen—be a part of it.

Getting Started Is Simple

- Verify your account today to claim your first ASK.

- Copy your referral link from the dashboard.

- Share it with friends, family, and colleagues.

- Watch your network grow and check your wallet on the 15th of each month for bonus deposits.

There’s no ceiling on what you can earn. Build your referral tree, engage with offers, and let ASK work for you—month after month.

Join the Permission Referral Network today. Share your link, build your network, and multiply your rewards.

For more information about eligibility and other Referral Program details, please refer to our Terms of Use.

Data Ownership in the Age of AI: How Permission is Pioneering Data Monetization

The rise of generative AI models like ChatGPT, Google Bard, and others has sparked a critical dialogue around data privacy and the ethics of large-scale data collection. Recently, regulatory crackdowns and high-profile lawsuits have highlighted a growing awareness: individuals deserve control—and compensation—for the use of their personal data in AI training and digital advertising. Permission.io is stepping up to meet this moment, empowering users to take back ownership of their data and earn for its use.

The Problem: Your Data, Their Profit

Major tech companies have built their empires on the back of user-generated data. For years, every click, search, and online interaction has been quietly harvested, aggregated, and monetized—without user consent or compensation. In the context of AI, this data is even more valuable. Large Language Models (LLMs) like those developed by OpenAI, Google, and others require enormous datasets to improve their accuracy and responsiveness. But whose data is it? And who profits from its use?